Demand for cutting-edge artificial intelligence (AI) chips has surged, driven largely by the boom in generative AI. These chips supply the computing power needed to train large language models, and they are propelling the AI industry forward.

In this dynamic landscape, the three cloud leaders, Amazon (AMZN), Microsoft (MSFT), and Alphabet (GOOGL), along with social media giant Meta Platforms (META), are rolling out custom chips built specifically for AI. The move signals a shift toward self-sufficiency as AI competition intensifies, letting these companies develop and iterate on AI models more quickly.

The Impending Challenge for NVIDIA

NVIDIA (NVDA), which holds more than 90% of the AI chip market and currently carries a Zacks Rank #1 (Strong Buy), faces a looming threat from the in-house chip strategies these tech behemoths have embraced. As major customers begin producing custom chips, competition for NVIDIA may intensify, potentially eroding its market share.

Moreover, NVIDIA's flagship AI chips have been in short supply, with customers waiting months for deliveries. The company's dependence on Taiwan Semiconductor to manufacture its chips limits how quickly it can expand production, which has helped prompt these AI giants to develop chips of their own.

Although the chips developed by Google, Microsoft, Meta, and Amazon may not match NVIDIA's in raw capability, they can be tailored to their owners' specific workloads. That customization can both lower costs and shorten deployment timelines, posing a serious challenge to NVIDIA's market dominance.

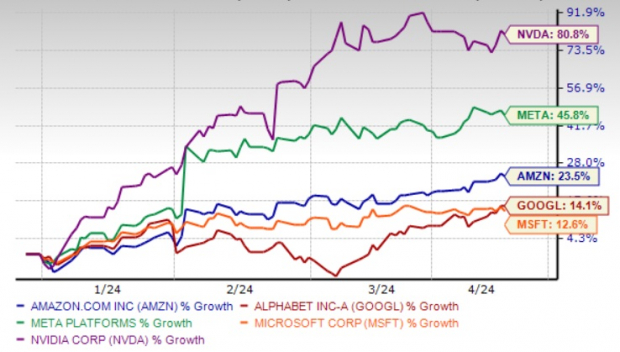

Year-to-Date Performance Comparison

Image Source: Zacks Investment Research

Race Among GOOGL, META, MSFT & AMZN in AI Chip Development

Alphabet's Google made waves in the AI sector by unveiling Axion, a custom central processing unit (CPU) designed to support AI workloads in its data centers. Axion processors, the company's first Arm-based CPUs, promise better performance and energy efficiency, with Google citing up to 30% higher performance than the fastest general-purpose Arm-based instances available in the cloud.

Meta Platforms joined the AI chip race with the Meta Training and Inference Accelerator (MTIA), a family of chips built specifically for Meta's AI workloads. The latest generation of MTIA is a significant upgrade over MTIA v1, substantially increasing compute and memory bandwidth to support advanced AI research and product development.

Microsoft introduced the Maia 100 and Cobalt 100 chips: Maia 100 is designed for AI tasks, while the Arm-based Cobalt 100 handles general-purpose computing. Maia 100 is built on a 5-nanometer process and contains 105 billion transistors, and both chips are being deployed in Microsoft's cloud infrastructure.

Amazon unveiled AWS Trainium2, a chip for training and running AI models that offers better performance and energy efficiency than the previous generation. Trainium2 is designed to train large language models quickly and cost-effectively, underscoring Amazon's commitment to AI innovation.